Cloud Power: The Importance of Interoperability, Part 2

What is Pro AV's best path for standards acceptance?

Last month, we discussed the topic of vendor interoperability in the cloud and the challenges of building ecosystems with different independent software vendor (ISV) products that “talk” to one another. Media technology companies innovate first, engineering products before there are standards that facilitate creating ecosystems with those products.

[The Importance of Interoperability, Part 1]

As systems integrators, we look to create homogeneous systems from multiple vendors’ products in the cloud, in the same way we use SDI or SMPTE ST 2110 for on-prem installs. While not designed initially as an internet protocol, Vizrt’s Network Device Interface (NDI) is currently the most prevalent interface between different vendors’ products in the public cloud.

Speed Bumps for Standardization

Many considerations go into choosing the proper protocols for a system in the cloud, including the type of transport to the cloud, the output destination (CDN, private network, terrestrial), the processing system, and whether the job is a complex switched production or a simple distribution.

We’re still in the early days, but I think we’ll see public cloud hyperscaler-oriented standards within the next few years. If we look at Google as an example, they’ve always been a champion of open standards and have the financial and technical ability to drive them forward. Just take a look at Google’s purchase of On2 Technologies for $124.6 million in 2010. Google then open sourced the code for On2’s VP8 video codec, which later served as the basis for VP9.

The problem we have with standards in this arena today is that adopting them can take organizations years of negotiation between vendors. With the speed of development typical in the internet industry, that glacial pace won’t cut it when standardizing protocols for the cloud.



We’ve been grappling with two questions around this. First, can standards bodies adapt and publish a standard in months, not years? Second, how did a commercially successful protocol like NDI become so widely used when it was never sanctioned by any standards organization?

The NDI Anomaly

Another approach is illustrated by NDI. NewTek created NDI, then offered it free to everyone, encouraging other vendors to use it. The intent was to generate more sales for NewTek, since its NDI-supported line would be interoperable with more products.

That’s one methodology, but it can be a long shot because only one company has skin in the game. And the competing companies you want as part of the ecosystem likely won’t jump to adopt another company’s tech. The case of NDI’s adoption is unusual because the technology was so compelling, and customers were asking for its implementation. When customers ask vendors, “This NDI interface is great, why aren’t you supporting it?” implementation speeds up considerably.

That was the case with Grass Valley’s Agile Media Processing Platform (AMPP) cloud-native live production system. NDI is a key component in ASG’s Virtual Production Control Room (VPCR) ecosystem. We asked Grass Valley to add NDI support to AMPP so it would talk to other products within VPCR. They came back with NDI support in a matter of weeks. It's been the same pattern when we asked other vendors for NDI support.

Association Time?

In discussions with hyperscaler and vendor execs about how to speed protocol standardization, we’ve concluded that the best way to achieve that goal would be through an industry association.

Case in point: The HDMI standard is owned by the HDMI Association. The association is open; any company can join. Most consumer displays have an HDMI port in the back. Interoperability is guaranteed because it is in the best interest of TV manufacturers and providers to be interoperable with each other.

Imagine if you needed a different set-top box for a Sony TV versus an LG TV versus a Samsung. What would that do to the marketplace? It wouldn't exist. Industry associations can move as fast as their members decide is commercially necessary.


If the HDMI Association comes out with HDMI 1.3, but that standard doesn’t have enough bandwidth for UHD, which is what TV manufacturers need to create market churn with new 4K TVs, it’s in everyone’s commercial interest to collaborate on HDMI 1.4. Then, come the holiday season, you can sell new UHD TVs that interface with a set-top box over HDMI 1.4.

I believe this is the methodology under which we're going to see standards evolve for internet and hyperscaler-based production. Industry associations put their money and energy into creating standards on which interoperable products can be based. And they can move at a speed in the best business interest of all involved.


Interoperability is crucial for both hyperscalers and vendors. The hyperscalers have two competitors: each other and on-prem installations. If something works on-premises but can’t be made to work in the cloud, your customers are not going to buy your cloud services. And if an ISV must write different code to run in Google versus AWS versus Azure to make things communicate, the hyperscalers have created a disadvantage for themselves: the ISV will select just one company for cloud services.

Vendors have discovered the same need to be interoperable with one another’s technology. Does anyone remember the Panasonic MII format war against the third-party-supported Sony Betacam? Getting a small piece of something is always better than a big piece of nothing.

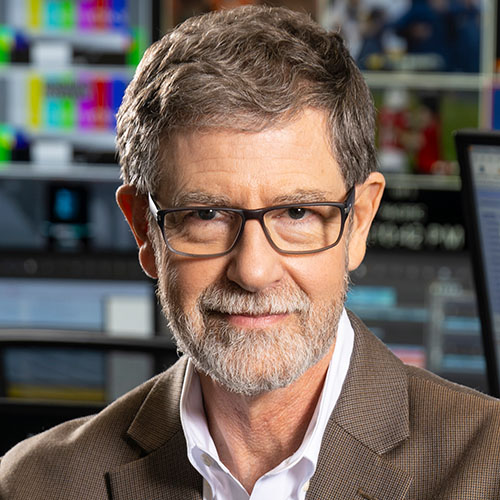

Dave Van Hoy is the president of Advanced Systems Group, LLC.