It has been surprising how quickly video has evolved. Two decades ago, video was commonly understood as analog images delivered from a studio over the air or over cable. A decade ago, we added the idea that video could enhance a voice conference by letting participants see one another. But the ability to stream media over the Internet has produced adaptive bit rate (ABR) video, such as the streams delivered by Netflix and Ustream or by a Wowza server.

Viewed from the perspective of network transport, IP video first used UDP (User Datagram Protocol) and was very sensitive to loss and jitter. ABR video, by contrast, uses HTTP (Hypertext Transfer Protocol) over TCP (Transmission Control Protocol). Because TCP retransmits lost packets and the player buffers video ahead of playback, the impact of loss and jitter is far more difficult to assess; often it is impossible to detect at all. That resilience makes HTTP over TCP an ideal transport for video on the Internet.
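
To see why loss and jitter are so hard to detect, consider a simplified playback-buffer model. This is an assumption for illustration, not the logic of any particular player: the video is fetched back to back as a series of fixed-length chunks, and a TCP retransmission or a jittery path shows up only as a longer download time for one chunk. The viewer notices nothing unless the buffer runs dry. The function name `count_stalls` and the sample timings are hypothetical.

```python
from itertools import accumulate


def count_stalls(download_times, chunk_duration, startup_chunks=2):
    """Count playback stalls in a simplified, hypothetical buffer model.

    download_times: seconds to fetch each chunk, fetched back to back. A lost
        packet that TCP retransmits simply makes that chunk's time longer.
    chunk_duration: seconds of video contained in each chunk.
    startup_chunks: chunks buffered before playback begins.
    """
    if not download_times:
        return 0
    # Time at which each chunk finishes downloading.
    arrivals = list(accumulate(download_times))
    # Playback starts once the startup buffer is full; next_needed is the
    # moment the player must have the next chunk in hand.
    next_needed = arrivals[min(startup_chunks, len(arrivals)) - 1]
    stalls = 0
    for arrival in arrivals:
        if arrival > next_needed:
            stalls += 1  # buffer ran dry: the viewer sees a freeze
            next_needed = arrival  # playback resumes when the chunk lands
        next_needed += chunk_duration  # playing this chunk buys more time
    return stalls


# Jittery delivery (one chunk takes 3 s) that still averages faster than real time.
print(count_stalls([1.0, 0.5, 3.0, 0.4, 1.8, 0.6], chunk_duration=2.0))  # 0
# Steady delivery that is slower than real time eventually stalls.
print(count_stalls([3.0] * 6, chunk_duration=2.0))  # 2
```

In the first case the 3-second chunk is absorbed by the buffer, so the jitter never reaches the screen; only a sustained shortfall in throughput produces visible stalls.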

Consider HTTP. Its early versions were designed to make it easy to request text files from a server somewhere on the World Wide Web. Very quickly, however, the need to request images, icons, executable scripts, and other media became apparent. The first version to do that was HTTP/1.0. It wasn’t very efficient, because each request for one of these objects required a separate TCP connection, and the overhead of setting up each connection was exceptionally high compared with bulk file transfers or traffic such as VoIP. To eliminate this overhead, HTTP/1.1, the most popular current version, was introduced. It allows multiple requests and responses over a single persistent TCP connection. Yet we still haven’t achieved the level of performance that users want. According to Ilya Grigorik’s High Performance Browser Networking: What Every Web Developer Should Know (O’Reilly Media, 2013), an average web page required 90 separate retrievals of HTML, scripts, images, and other objects. Nevertheless, developers favor HTTP/1.1 because every browser supports it and it passes easily through firewalls.
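
A back-of-the-envelope model shows why reusing one connection matters for a page of about 90 objects. The model is deliberately crude and its assumptions are ours: objects are fetched one at a time, a TCP handshake costs one round trip (RTT), and each request and response costs one more. It ignores DNS, TLS, slow start, pipelining, and the parallel connections real browsers open. The function name `page_load_rtts` and the 50 ms RTT are hypothetical.

```python
def page_load_rtts(num_objects, persistent):
    """Round trips to fetch num_objects sequentially (simplified model).

    Assumes 1 RTT per TCP handshake and 1 RTT per request/response;
    ignores DNS, TLS, slow start, pipelining and parallel connections.
    """
    if persistent:
        # HTTP/1.1 persistent connection: one handshake, then reuse it.
        return 1 + num_objects
    # HTTP/1.0 default: a fresh handshake before every object.
    return 2 * num_objects


rtt_ms = 50  # hypothetical round-trip time
for label, persistent in (("HTTP/1.0", False), ("HTTP/1.1", True)):
    rtts = page_load_rtts(90, persistent)
    print(f"{label}: {rtts} RTTs = {rtts * rtt_ms} ms")
# HTTP/1.0: 180 RTTs = 9000 ms
# HTTP/1.1: 91 RTTs = 4550 ms
```

Even in this simple model, persistent connections roughly halve the round trips, but one round trip per object remains, which is part of why performance still falls short of what users want.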

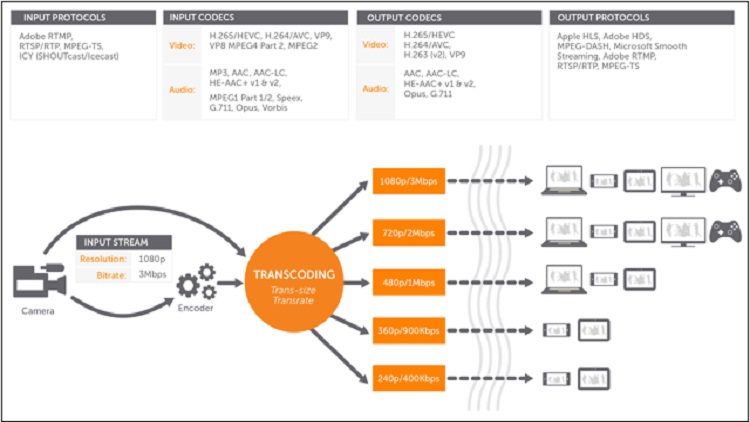

This wide support led Adobe, Apple, and Microsoft each to develop a method of delivering video over HTTP/TCP; these methods are what we call adaptive bit rate video. All three implementations follow the same basic procedure:

1) The client would authenticate to a server and identify the video the user desired to watch.

2) The server would accept the request and return a description of the available profiles. Each profile describes a bit rate, resolution, frame rate, and so forth. This description became known as the manifest.

3) The client would choose a profile and request the first “chunk” of video, then keep requesting chunks, switching to a higher or lower profile as network conditions change.
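
The client’s choice of profile can be sketched as a simple throughput rule: pick the highest bit rate that fits within a safety margin of the throughput measured while downloading recent chunks. This is an illustrative assumption, not the algorithm Adobe, Apple, or Microsoft actually use; real players may also weigh how full the playback buffer is. The `Profile` fields mirror the manifest contents described in step 2, and the names, sample profiles, and 0.8 safety factor are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """One entry in a hypothetical manifest."""
    bitrate_kbps: int
    width: int
    height: int
    frame_rate: float


def choose_profile(profiles, measured_kbps, safety=0.8):
    """Pick the highest-bit-rate profile within safety * measured throughput.

    Falls back to the lowest-bit-rate profile if none fits.
    """
    budget = measured_kbps * safety
    fitting = [p for p in profiles if p.bitrate_kbps <= budget]
    if fitting:
        return max(fitting, key=lambda p: p.bitrate_kbps)
    return min(profiles, key=lambda p: p.bitrate_kbps)


# Hypothetical manifest with three profiles.
MANIFEST = [
    Profile(800, 640, 360, 30.0),
    Profile(2500, 1280, 720, 30.0),
    Profile(5000, 1920, 1080, 30.0),
]

print(choose_profile(MANIFEST, 4000).height)  # 720 (budget 3200 kbps)
print(choose_profile(MANIFEST, 500).height)   # 360 (nothing fits; lowest)
```

Re-running the choice before each chunk request is what makes the stream adaptive: as measured throughput rises or falls, the next chunk comes from a different profile.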

DASH (Dynamic Adaptive Streaming over HTTP) is the standard that emerged from the effort to formalize the general procedure described above. Support for DASH is growing, since it promises to let all clients and servers use a common technique.
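
In DASH, the manifest is an XML document called the Media Presentation Description (MPD). Each profile appears as a `Representation` element whose `bandwidth` attribute gives its bit rate in bits per second. The sketch below lists those profiles using Python’s standard `xml.etree.ElementTree`; the sample MPD is hypothetical and heavily trimmed (a real one also says where to fetch each chunk), and `list_representations` is an illustrative name.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the DASH MPD schema.
NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Hypothetical, trimmed MPD: one video adaptation set with three profiles.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     mediaPresentationDuration="PT10M" minBufferTime="PT2S"
     profiles="urn:mpeg:dash:profile:isoff-on-demand:2011">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="360p" bandwidth="800000" width="640" height="360" frameRate="30"/>
      <Representation id="720p" bandwidth="2500000" width="1280" height="720" frameRate="30"/>
      <Representation id="1080p" bandwidth="5000000" width="1920" height="1080" frameRate="30"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_representations(mpd_text):
    """Return (id, bandwidth_bps, width, height) for each Representation."""
    root = ET.fromstring(mpd_text)
    return [
        (
            rep.get("id"),
            int(rep.get("bandwidth")),
            int(rep.get("width")),
            int(rep.get("height")),
        )
        for rep in root.iterfind(".//mpd:Representation", NS)
    ]


for rep in list_representations(SAMPLE_MPD):
    print(rep)
# ('360p', 800000, 640, 360)
# ('720p', 2500000, 1280, 720)
# ('1080p', 5000000, 1920, 1080)
```

Because every DASH server describes its profiles in this same format, one client can read manifests from any compliant server, which is the common technique the standard promises.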

Phil Hippensteel, PhD, is a regular contributor to AV Technology. He teaches information systems at Penn State Harrisburg.