CNN achieves the first remote "holographic" live interview during its coverage of the 2008 U.S. Presidential election.

For the first time on TV, CNN anchor Wolf Blitzer, located in CNN's Election Center in New York, could speak face to face with CNN correspondent Jessica Yellin, who was "beamed" live from Chicago. Jessica's image was projected in 3D in the CNN studio while she was actually standing hundreds of miles away in a high-tech rig. The same technology was used again later in the evening for an interview with musician and songwriter Will.i.am. The effect reminded viewers of Hollywood's biggest sci-fi blockbusters.

Immediately following the broadcast, Vizrt received hundreds of inquiries from all over the world asking how Vizrt's tech wizards made this happen.

CNN senior VP and Washington Bureau chief David Bohrman had been promoting the vision of a live holographic interview for many years. A live 3D interview is technologically demanding: it requires transmitting a large amount of data as well as the processing power to track and visualize the interview subject in real time. No solution to that challenge existed until Vizrt partner SportVU developed video treatment technologies that could be combined with Vizrt's real-time tracking and rendering software. The combination would allow CNN to transmit a full 3D image of a person over great distances. However, additional challenges remained.

THE SETUP

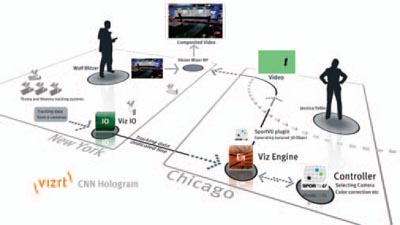

CNN's Election Center in New York is equipped with six tracking cameras. The tracking technology uses a combination of camera dollies and Thoma's handheld Walkfinders. The tracking tells the graphics engines where each camera is located and precisely what it is pointing at.

Hundreds of miles away in Chicago, two special studios were erected under tents. Each tent contained a semicircular green rig covering 220 degrees, with 35 HD cameras positioned along its wall. Each camera captured a specific angle of the person standing inside the rig.

HOW DOES IT WORK?

The tracking data from the cameras in the CNN Election Center is processed by Vizrt's Viz Virtual Studio software. More specifically, Viz IO, a studio configuration and calibration tool, collects all the data and converts each camera's position and focus into 3D coordinates. The tracking and camera position data are then instantly transmitted to the remote location, the "transporter room."
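Once a studio camera's position is expressed in 3D coordinates, the direction from which the remote subject is being "seen" follows from basic trigonometry. The sketch below is a hypothetical illustration, not Vizrt's code: the `Vec3` type, the coordinate convention (x and y on the studio floor, z as height), and the idea of a fixed `subject_spot` where the correspondent should appear are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    """A point in studio coordinates, in meters (hypothetical convention:
    x and y span the studio floor, z is height above the floor)."""
    x: float
    y: float
    z: float


def view_azimuth_deg(camera: Vec3, subject: Vec3) -> float:
    """Horizontal direction of the camera as seen from the subject's virtual
    position, in degrees (0 = along +x, counterclockwise positive)."""
    return math.degrees(math.atan2(camera.y - subject.y, camera.x - subject.x))


def view_elevation_deg(camera: Vec3, subject: Vec3) -> float:
    """Angle of the camera above (+) or below (-) the subject's horizontal plane."""
    horizontal = math.hypot(camera.x - subject.x, camera.y - subject.y)
    return math.degrees(math.atan2(camera.z - subject.z, horizontal))


# Example: a dolly camera 3 m out and 3 m to the side of the spot where
# the correspondent should appear, at the same height.
subject_spot = Vec3(0.0, 0.0, 1.7)
dolly_cam = Vec3(3.0, 3.0, 1.7)
print(round(view_azimuth_deg(dolly_cam, subject_spot), 1))    # 45.0
print(round(view_elevation_deg(dolly_cam, subject_spot), 1))  # 0.0
```

An angle like this is compact enough to send to the remote site for every video frame, which fits the article's point that only tracking and position data, not studio video, needs to travel to the transporter room.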

In the transporter room, the tracking information is processed to identify the required view angle and, from it, which two of the 35 cameras to use. From the two captured images, a Viz Engine plug-in specially developed by SportVU creates a full 3D representation of the person in the rig. The images are blended and color-corrected for use as a texture. The 3D model is rendered and textured with the video signal in Viz Engine and sent back to the studio as a full HD video signal.
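Choosing "the two closest cameras out of the 35" for a given view angle can be sketched as a lookup along the rig's arc. This is an illustrative sketch only: it assumes the 35 cameras are evenly spaced over the 220-degree arc, centered on 0 degrees, and it uses simple linear blend weights. The function names are hypothetical, and SportVU's actual 3D reconstruction from the two images is not reproduced here.

```python
import math
from typing import Tuple

# Figures from the article: the rig covers 220 degrees with 35 cameras.
RIG_SPAN_DEG = 220.0
NUM_CAMERAS = 35
# Assumption: cameras are evenly spaced and the arc is centered on 0 degrees,
# so camera 0 sits at -110 degrees and camera 34 at +110 degrees.
START_DEG = -RIG_SPAN_DEG / 2


def camera_angle(index: int) -> float:
    """Angular position of rig camera `index` (0-based) on the arc."""
    return START_DEG + index * RIG_SPAN_DEG / (NUM_CAMERAS - 1)


def two_closest_cameras(view_deg: float) -> Tuple[int, int, float, float]:
    """Return (left, right, w_left, w_right): the two adjacent rig cameras that
    bracket the requested view angle and linear blend weights that sum to 1."""
    # Views outside the rig's coverage are clamped to its edges.
    clamped = max(START_DEG, min(-START_DEG, view_deg))
    # Fractional camera index along the arc (0.0 .. NUM_CAMERAS - 1).
    t = (clamped - START_DEG) * (NUM_CAMERAS - 1) / RIG_SPAN_DEG
    # Keep `left` at most NUM_CAMERAS - 2 so `right` is always a valid camera.
    left = min(int(math.floor(t)), NUM_CAMERAS - 2)
    right = left + 1
    w_right = t - left
    return left, right, 1.0 - w_right, w_right


print(two_closest_cameras(0.0))    # (17, 18, 1.0, 0.0)
print(two_closest_cameras(110.0))  # (33, 34, 0.0, 1.0)
```

As a studio camera dollies around the subject, the weights slide continuously from one rig camera to its neighbor instead of jumping between discrete viewpoints. That is one plausible reading of why the two-camera approach described below looked smoother than using a single camera at a time.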

Back in the studio, the local and remote images are assembled and special effects (including the aura) are added to complete the impression of a holographic interview. All of this happens within fractions of a second, allowing live playout.
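Assembling the remote image with the local studio picture is, at its core, a compositing step. The sketch below shows the standard Porter-Duff "over" operation on individual pixels. It assumes, hypothetically, that the returned HD signal comes with a matte (per-pixel coverage) separating the subject from the green background. Viz Engine's actual compositing pipeline and the aura effect are not modeled.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]  # channel values in [0, 1]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Porter-Duff "over" with straight (non-premultiplied) alpha:
    out = alpha * fg + (1 - alpha) * bg, per channel."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    r, g, b = (alpha * f + (1.0 - alpha) * k for f, k in zip(fg, bg))
    return (r, g, b)


def composite_frame(remote: List[RGB], matte: List[float], studio: List[RGB]) -> List[RGB]:
    """Lay the keyed remote frame over the studio frame, pixel by pixel.
    Frames are flat lists of equal length (a simplification of real video)."""
    if not (len(remote) == len(matte) == len(studio)):
        raise ValueError("frames must have the same number of pixels")
    return [over(f, a, k) for f, a, k in zip(remote, matte, studio)]


# Two-pixel example: a fully opaque subject pixel, then a half-covered edge pixel.
remote = [(1.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
matte = [1.0, 0.5]
studio = [(0.0, 0.0, 1.0), (0.0, 0.0, 0.0)]
print(composite_frame(remote, matte, studio))
# [(1.0, 0.0, 0.0), (0.5, 0.5, 0.5)]
```

Partial matte values at the subject's edges let the remote image fade into the studio background rather than showing a hard cut-out line.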

- "We initially considered using the video from only one camera input at a time to re-create the virtual subject," says Ran Yakir, Vizrt's head of R&D Israel, "but we were not happy with the motion quality; the resulting image was simply not smooth enough. SportVU eventually decided to implement an algorithm that used input from the two closest cameras out of the 35 and re-create a full 3D model based on these videos. SportVU's plug-in for Viz Engine is quite revolutionary in that respect."